PyPI package to algorithmically annotate eye-tracking data. Recommended Python version: 3.7

GazeClassify provides automated, standardized eye-tracking annotation. Anyone can analyze gaze data online with fewer than 10 lines of code.


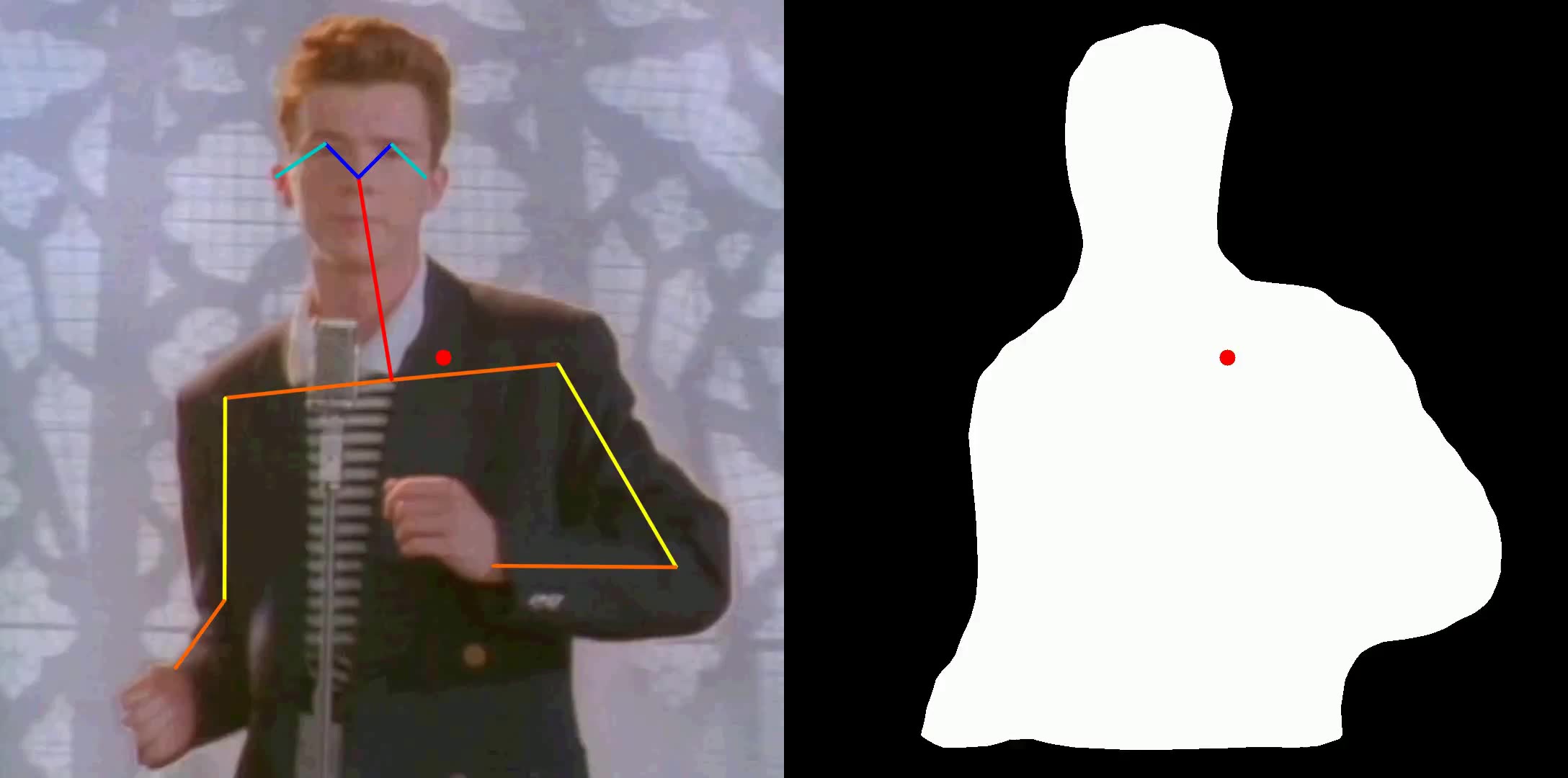

The exported CSV contains, for each frame, the distance from the gaze point (red circle) to human joints (left image) and to human shapes (right image).

| frame number | classifier name | gaze_distance [pixel] | person_id | joint name |
|---|---|---|---|---|
| 0 | Human_Joints | 79 | 0 | Neck |
| ... | ... | ... | ... | ... |
| 0 | Human_Shape | 0 | None | None |
| ... | ... | ... | ... | ... |
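As a rough illustration of how the exported table could be consumed, the sketch below parses two sample rows with Python's standard `csv` module. It assumes the CSV header matches the column names shown in the table above and that the file is comma-separated; a real export may differ. The helper names `frames_with_gaze_on_shape` and `nearest_joint_per_frame` are hypothetical and not part of gazeclassify, and reading a distance of 0 as "gaze is on the human shape" is an interpretation, not something the package documents here.

```python
import csv
import io

# Hypothetical sample mirroring the table above; a real export may use
# different header spellings or a different delimiter.
SAMPLE_CSV = """frame number,classifier name,gaze_distance [pixel],person_id,joint name
0,Human_Joints,79,0,Neck
0,Human_Shape,0,None,None
"""


def frames_with_gaze_on_shape(csv_text):
    """Return frame numbers where the gaze distance to a human shape is 0."""
    frames = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        is_shape = row["classifier name"] == "Human_Shape"
        if is_shape and float(row["gaze_distance [pixel]"]) == 0:
            frames.append(int(row["frame number"]))
    return frames


def nearest_joint_per_frame(csv_text):
    """Map each frame number to the (joint name, distance) closest to the gaze."""
    nearest = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["classifier name"] != "Human_Joints":
            continue
        frame = int(row["frame number"])
        distance = float(row["gaze_distance [pixel]"])
        # Keep only the smallest distance seen so far for this frame.
        if frame not in nearest or distance < nearest[frame][1]:
            nearest[frame] = (row["joint name"], distance)
    return nearest


print(frames_with_gaze_on_shape(SAMPLE_CSV))
print(nearest_joint_per_frame(SAMPLE_CSV))
```

To apply the same helpers to a real export, read the file's text and pass it in place of `SAMPLE_CSV`, after checking that the header names match.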

Run the analysis on the example trial:

    from gazeclassify import Analysis, PupilLoader, SemanticSegmentation, InstanceSegmentation
    from gazeclassify import example_trial

    analysis = Analysis()

    # Load the example recording
    PupilLoader(analysis).from_recordings_folder(example_trial())

    # Distance from gaze to human shapes
    SemanticSegmentation(analysis).classify("Human_Shape")

    # Distance from gaze to human joints
    InstanceSegmentation(analysis).classify("Human_Joints")

    # Write the results to CSV
    analysis.save_to_csv()

Capture eye-tracking data with a Pupil eye tracker, then export the data using the Pupil software. You will get a folder containing the exported world video and the gaze timestamps. Finally, let gazeclassify analyze the exported data:

    from gazeclassify import Analysis, PupilLoader, SemanticSegmentation, InstanceSegmentation

    analysis = Analysis()

    PupilLoader(analysis).from_recordings_folder("path/to/your/folder_with_exported_data/")

    SemanticSegmentation(analysis).classify("Human_Shape")

    InstanceSegmentation(analysis).classify("Human_Joints")

    analysis.save_to_csv()